Artificial Intelligence (AI) recruiting tools are saving hiring managers and recruiters time, but candidates are left worrying whether a machine learning algorithm is smart enough to secure them their next job.

A quick Google search for “AI recruiting tools” will yield a slew of listicles counting down the best artificial intelligence-powered talent acquisition software and “definitive guides” for human resources (HR) professionals looking to outsource some of their workload to automated systems. With remote work on its way to becoming the norm as a result of the pandemic, demand for AI-powered interview software has been on the rise, and in tandem, so have questions and doubts about the capabilities of artificial intelligence in gauging the competency and working habits of potential employees. Critics have argued that, much like the hiring processes carried out by humans, AI systems can introduce bias and produce inaccuracies. Are AI recruiting tools really ready?

The Truth About AI Recruiting

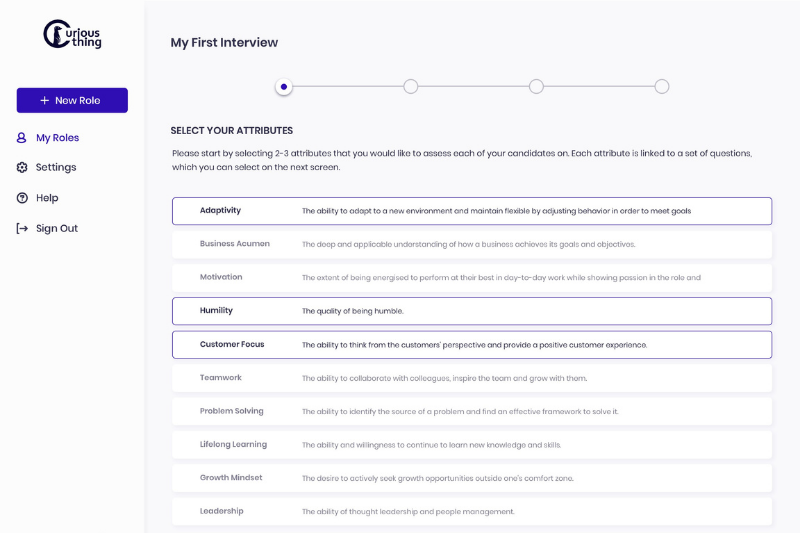

Earlier this month, the MIT Technology Review tested software from two companies specialising in AI recruitment, and the results raised concerns about the machine learning algorithms behind these tools. One of the programmes, MyInterview, evaluates personality traits based on the Big Five personality test, a psychometric evaluation that considers openness, conscientiousness, extroversion, agreeableness, and emotional stability. The other programme, Curious Thing, evaluates other metrics such as humility and resilience. Clayton Donnelly, industrial and organisational psychologist at MyInterview, told the Tech Review that the algorithm scored the test candidate’s personality based on the intonation of her voice, rather than the content of the interview itself. However, Fred Oswald, a professor of industrial and organisational psychology at Rice University, argues that intonation is not a reliable indicator of personality. “There are opportunities for AI or algorithms and the way the questions are asked to be more structured and standardised,” he says. “But I don’t think we’re necessarily there in terms of the data.”

AI-powered interviews have been touted as bias-eliminating machines devoid of preconceptions about candidates, but critics argue otherwise. Bias has surfaced in factors as trivial as appearance and as contentious as gender. An investigation published by Bavarian Public Broadcasting this February found that the assessments produced by Retorio’s algorithm were influenced by whether candidates wore accessories such as glasses during the interview. In a case with far graver implications, it emerged in 2018 that Amazon’s AI recruiting tool had been downgrading female candidates. The system, which was trained on ten years of historical hiring data, taught itself that male candidates were preferable. Because that data reflected a male-dominated tech industry, the software learnt to penalise candidates who had attended all-women’s colleges or belonged to women’s clubs and societies. Amazon ultimately scrapped the project once it became clear that the algorithm was producing biased results.

Unknown Unknowns and Portability Traps

AI bias arises when the training data misrepresents reality or reflects existing prejudices. The former might arise when a deep learning system is fed more images of light-skinned faces than dark-skinned faces, leaving the resulting facial recognition system worse at recognising people with darker skin. The latter is reflected in the aforementioned Amazon case, the product of historical hiring decisions that disproportionately favoured male applicants over female ones. There is also the issue of data preparation. Algorithms are tasked with evaluating “attributes” that serve as signals of a certain quality; to evaluate creditworthiness, for instance, an algorithm might consider age, income, or number of paid-off loans. Choosing which attributes the algorithm should consider or ignore can significantly affect the software’s predictive validity, which is why data preparation is known as the “art” of deep learning.
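
One way to surface the first kind of bias is to break a system’s accuracy down by group rather than reporting a single headline figure. The sketch below is purely illustrative: the evaluation log, the group labels, and the `accuracy_by_group` helper are all invented for this example and do not come from any of the tools discussed here.

```python
from collections import defaultdict

# Invented evaluation log of (group, prediction_correct) pairs. Not real data:
# the "darker" group is deliberately under-represented to mirror the scenario above.
RESULTS = (
    [("lighter", True)] * 90 + [("lighter", False)] * 5
    + [("darker", True)] * 3 + [("darker", False)] * 2
)


def accuracy_by_group(results):
    """Return accuracy for each group, plus an 'overall' entry."""
    total = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in results:
        # Count every record once for its own group and once for the overall figure.
        for key in (group, "overall"):
            total[key] += 1
            correct[key] += is_correct
    return {key: correct[key] / total[key] for key in total}


for group, acc in accuracy_by_group(RESULTS).items():
    print(f"{group:8s} {acc:.2f}")
```

With these made-up numbers, overall accuracy is 93 per cent even though the system is right only 60 per cent of the time for the under-represented group, which is how a skewed dataset can hide a serious failure behind a respectable average.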

That said, mitigating bias is still a challenging process, which begins with identifying the root of the bias itself. In Amazon’s case, when engineers working on the project found out that the algorithm was penalising female candidates, they programmed it to ignore explicitly gendered words such as “women’s”, but soon discovered that the new system was picking up on words such as “executed” and “captured”: implicitly gendered words connoting aggression and militancy that are typically associated with masculinity. AI hiring tools are also prone to “portability traps”, a term coined by Andrew Selbst, a postdoctoral fellow at the Data & Society Research Institute. “You can’t have a system designed in Utah and then applied in Kentucky directly because different communities have different versions of fairness,” says Selbst.
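
The problem with simply deleting gendered words can be shown with a toy example. The sketch below is not Amazon’s system: the hiring history, the blocklist, the choice of “organised” as a stand-in for a word used mostly by the rejected group, and the `word_weights` helper are all assumptions made for illustration. It scores each word by how far the hire rate of resumes containing it sits from the overall hire rate.

```python
from collections import defaultdict

# Invented toy history of (resume words, hired?) pairs. Not real data:
# the labels deliberately encode a past preference for one group.
HISTORY = [
    ({"executed", "python"}, True),
    ({"captured", "java"}, True),
    ({"executed", "java"}, True),
    ({"captured", "python"}, True),
    ({"women's", "python"}, False),
    ({"women's", "java"}, False),
    ({"organised", "python"}, False),
    ({"organised", "java"}, False),
]
BLOCKLIST = {"women's"}  # explicitly gendered terms the model is told to ignore


def word_weights(history, blocklist=frozenset()):
    """Weight each word by (hire rate of resumes containing it) - (overall hire rate)."""
    overall = sum(hired for _, hired in history) / len(history)
    seen = defaultdict(int)
    hires = defaultdict(int)
    for words, hired in history:
        for word in words - blocklist:  # blocklisted words are never counted
            seen[word] += 1
            hires[word] += hired
    return {word: hires[word] / seen[word] - overall for word in seen}


filtered = word_weights(HISTORY, BLOCKLIST)
for word, weight in sorted(filtered.items(), key=lambda item: item[1]):
    print(f"{word:10s} {weight:+.2f}")
```

In this toy data the skill words come out neutral, and “women’s” disappears once it is blocklisted, yet “executed” and “captured” still carry a strong positive weight because they correlate with the group that was historically hired. Removing the explicit signal leaves the proxies intact.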

What Are Businesses Doing?

While companies do recognise the potential of AI recruiting, concerns about discriminatory judgements have been enough to discourage full reliance on the technology. “Using AI in screening tens of thousands of applicant resumes has helped us cut total labor time by 75 percent,” said Tomoko Sugihara, director of recruitment at SoftBank Corp. Even so, Sugihara says that HR personnel still review the resumes and interview recordings that the AI has filtered out.

To reduce the risk of coronavirus infections, Kirin Holdings Co. has decided to complete all hiring processes online, and will also consider utilising AI technology in future recruiting activities. The Japanese brewer is aware of the limitations of training deep learning algorithms on historical hiring data, and remains cautious about the new trend in talent acquisition. “At present, we are not using AI because it may only lead to accepting those that match a certain standard at a time when we are looking to hire diverse personnel,” said Kirin spokesman Keita Sato.

Institutional Response

If it is any consolation, rules governing the use of these AI systems do exist. Employment lawyer Mark Girouard says AI recruitment tools fall under the Uniform Guidelines on Employee Selection Procedures. However, Girouard also notes that so long as a tool does not discriminate against candidates on the basis of race or gender, no federal law requires it to meet any particular standard of precision or accuracy. In its case against Utah-based video interview platform HireVue, the Electronic Privacy Information Center (EPIC) argues the company has failed to meet internationally recognised and endorsed standards for the use of AI set out by the Organization for Economic Co-operation and Development.
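
The Uniform Guidelines include a widely used rule of thumb, the “four-fifths rule”: if one group’s selection rate is less than 80 per cent of the rate for the most-selected group, that is generally treated as evidence of adverse impact. The article’s sources do not discuss this rule, and the sketch below is only an illustration of the arithmetic, not a legal test. The applicant counts, group names, and the `adverse_impact_ratios` helper are invented.

```python
FOUR_FIFTHS = 0.8  # threshold from the four-fifths rule of thumb


def adverse_impact_ratios(selected, applicants):
    """Return each group's selection rate divided by the highest group's rate."""
    rates = {group: selected[group] / applicants[group] for group in applicants}
    top_rate = max(rates.values())
    return {group: rate / top_rate for group, rate in rates.items()}


# Invented counts for two hypothetical applicant groups.
applicants = {"group_a": 200, "group_b": 150}
selected = {"group_a": 60, "group_b": 27}

for group, ratio in adverse_impact_ratios(selected, applicants).items():
    flag = "below four-fifths" if ratio < FOUR_FIFTHS else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({flag})")
```

Here group A is selected at a rate of 30 per cent and group B at 18 per cent, giving group B an impact ratio of 0.60, well under the 0.8 threshold. A check like this says nothing about whether the tool’s scores are accurate, which is the gap Girouard points to.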

Christoph Hohenberger, managing director of German AI video interview platform Retorio, says that the software is not intended to be the deciding factor in the talent acquisition process. “We are an assisting tool, and it’s being used in practice also together with human people on the other side,” he says. “It’s not an automatic filter.”
