Microsoft’s AI-powered news aggregator, MSN.com, has come under fire for racial bias after its algorithm mistakenly paired an article about Little Mix singer Jade Thirlwall with a photo of her bandmate Leigh-Anne Pinnock. Both women are mixed-race. The blunder comes a week after Microsoft announced it was laying off 77 editorial staff as part of a switch to AI-powered news aggregation.

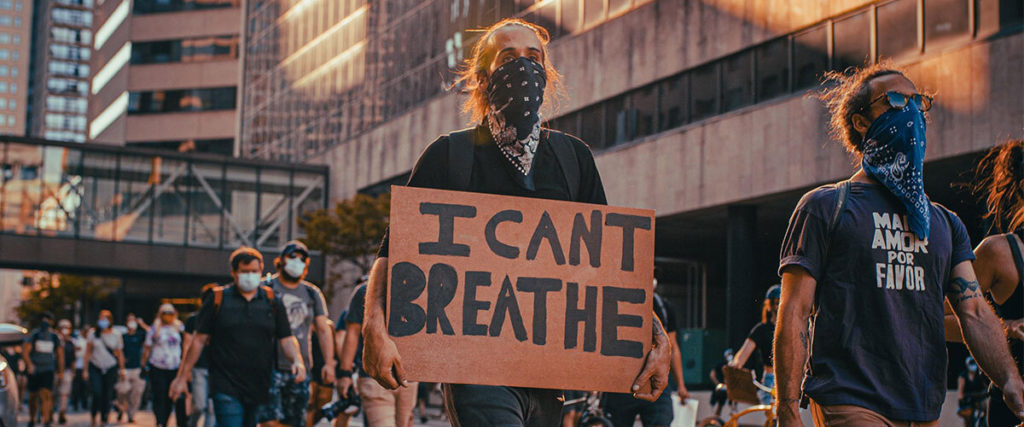

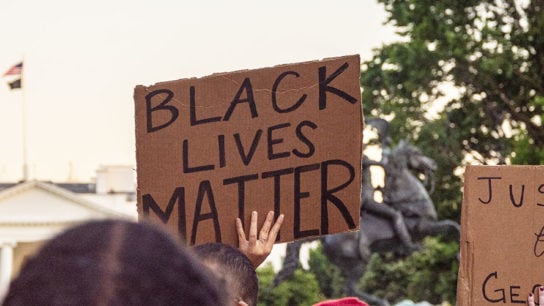

The resulting backlash comes at a time when nationwide protests to end racial discrimination and police brutality are still sweeping across America after an unarmed black man, George Floyd, died at the hands of police in Minneapolis during an arrest on 25 May.

The racial faux pas highlights the growing problem of machine learning bias, in which human prejudices are embedded in, and amplified by, the algorithms people create. A widely acknowledged issue in the tech sector, AI bias could have serious consequences by perpetuating underlying prejudices such as systemic racism. For example, according to The New York Times, facial recognition algorithms are up to 100 times more likely to misidentify African-American and Asian faces than Caucasian faces. If adopted by law enforcement agencies, such systems could lead to a slew of false arrests, convictions, and imprisonments that disproportionately affect ethnic minority communities.

Amid the protests, IBM announced earlier this week that it was ending research and development of all facial recognition software, citing concerns about “mass surveillance, racial profiling, violations of basic human rights and freedoms” in a letter to Congress. “Technology can increase transparency and help police protect communities but must not promote discrimination or racial injustice,” the company explained.

Two days later, Amazon announced a one-year moratorium on police use of its controversial facial recognition software, Rekognition, stating: “We’ve advocated that governments should put in place stronger regulations to govern the ethical use of facial recognition technology […] We hope this one-year moratorium might give Congress enough time to implement appropriate rules.”

Machine learning algorithms are already being used for decision-making in a myriad of key industries: an AI could help decide whether you are granted a bank loan, selected for a job interview, or given a shorter prison sentence.

Previous calls for greater transparency in algorithmic processes have met resistance from leading firms: algorithms used by the likes of Google, Microsoft, IBM and Apple are often treated as valuable trade secrets, the product of countless hours of in-house research and development. However, Harvard Business Review argues that an extremely high level of transparency is neither necessary nor beneficial to the average user, given the vast amounts of data involved. Instead, responsible companies should simply “work to provide basic insights on the factors driving algorithmic decisions.” The priority should be to improve consumer understanding by identifying and sharing the key factors behind each decision, a principle echoed by the ‘right to explanation’ associated with the GDPR.

As we become increasingly reliant on automated processes driven by algorithms, it remains to be seen to what extent governments, corporations and individuals are willing to enact ongoing protective measures and regulations to ensure that technology does not continue to perpetuate systemic bias.
